TensorFlow Tutorial

Introduction

This guide explores TensorFlow, an open-source platform from Google for machine learning and deep neural network research. It is widely used by developers of neural networks because of its flexibility and robustness. This tutorial presents the fundamental principles and components of TensorFlow.

Overview

TensorFlow is a complete platform for building machine learning models. It covers the entire workflow: data ingestion, training, evaluation, deployment, and monitoring. TensorFlow represents computations as data flow graphs, with nodes representing mathematical operations and edges representing the multidimensional data arrays (tensors) that flow between operations.

TensorFlow enables developers to distribute computations over one or more CPUs/GPUs, as well as mobile platforms and web browsers. For complicated models and datasets, the adaptable architecture enables scaling up to hundreds of GPUs and thousands of workstations.

TensorFlow was created by Google Brain team researchers to undertake machine learning and deep neural network research. It was later made available as an open-source library.

Popular Libraries for Deep Learning

Some of the most popular open-source deep learning frameworks today include:

- TensorFlow - Created by Google, supports Python, C++, Java, and Go. Very flexible architecture.

- PyTorch - Programmer-friendly framework created by Facebook, uses Python APIs.

- MXNet - Supports Python, C++, R, Scala, and Julia. Optimized for efficiency and speed.

- Microsoft Cognitive Toolkit (CNTK) - Supports Python, C++, and computational networks like CNN and RNN.

- Keras - A high-level Python library that ships with TensorFlow as tf.keras. Earlier standalone versions could also run on CNTK or Theano.

What is TensorFlow?

TensorFlow is an open-source library for artificial intelligence and deep learning applications. Google Brain developed TensorFlow, which uses data flow graphs to represent the computation, sharing, and reuse of machine learning models.

Some key features of TensorFlow include:

- TensorFlow provides a flexible architecture that allows computation to be deployed across platforms like CPUs, GPUs, TPUs, mobile devices, and web browsers through TensorFlow Lite and TensorFlow.js.

- Programming languages supported include Python, C++, Java, Go, JavaScript, and Swift through the available APIs.

- Visualization and debugging of TensorFlow graphs are enabled by TensorBoard.

- Computations can be distributed across multiple CPUs and GPUs. Automatic differentiation optimizes computations.

- TensorFlow is open-source software released under the Apache 2.0 license. This allows the TensorFlow platform to be freely used, modified, and distributed.
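The automatic differentiation mentioned in the feature list can be illustrated with a minimal sketch. It assumes TensorFlow 2.x with eager execution; the function y = x^2 + 2x and the value x = 3 are illustrative choices, not part of the original tutorial.

```python
import tensorflow as tf

# A trainable scalar; tf.Variable objects are watched by the tape automatically.
x = tf.Variable(3.0)

# Operations executed inside the tape context are recorded for differentiation.
with tf.GradientTape() as tape:
    y = x * x + 2.0 * x  # y = x^2 + 2x

# dy/dx = 2x + 2, which evaluates to 8 at x = 3.
grad = tape.gradient(y, x)
print(float(grad))
```

The same mechanism is what Keras optimizers rely on when they compute gradients of a loss with respect to model weights.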

The main components of TensorFlow include:

- The tf.data API, which allows the creation of scalable input pipelines to load and prepare data for modeling.

- Estimators (tf.estimator), which provide high-level APIs for steps like training, evaluation, and prediction. They are deprecated in recent TensorFlow 2.x releases in favor of Keras.

- Keras, a user-friendly API that enables rapid prototyping and iteration for deep learning models.
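As a rough illustration of the tf.data component listed above, the sketch below builds a small input pipeline from in-memory NumPy arrays. The toy data, buffer size, and batch size are assumptions chosen only for demonstration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy data: 10 samples with one feature each.
features = np.arange(10, dtype=np.float32).reshape(10, 1)
labels = 2.0 * features

# Slice the arrays into (feature, label) pairs, then shuffle, batch, and prefetch.
dataset = (
    tf.data.Dataset.from_tensor_slices((features, labels))
    .shuffle(buffer_size=10, seed=0)
    .batch(4)
    .prefetch(tf.data.AUTOTUNE)
)

# Ten samples with a batch size of 4 give batches of 4, 4, and 2 samples.
batch_shapes = [x.shape.as_list() for x, _ in dataset]
print(batch_shapes)
```

A dataset built this way can be passed directly to model.fit in place of separate feature and label arrays.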

What is a Tensor?

A tensor is a multidimensional array. It is the fundamental data structure of TensorFlow. The term comes from mathematics, where a tensor generalizes scalars, vectors, and matrices to any number of dimensions. Consider the following tensor shapes and ranks:

- A 0-D tensor is a scalar value, such as 5.

- A 1-D tensor is a vector with n elements, such as [2, 3, 5].

- A 2-D tensor is an n x m matrix, such as [[1, 2, 3], [4, 5, 6]].

- A 3-D Tensor is a 3D array having n x m x r elements, similar to a number cube.

Tensors enable the consistent representation of data from images, text, audio, video, and other sources for machine learning modeling. Let's go on to the tensor rank.

Tensor Rank

The rank refers to the number of dimensions a Tensor has. Here are some examples:

- A scalar value would have rank 0

- A vector with n elements would be rank 1

- A matrix with n x m elements would be rank 2

- An RGB image with width x height x 3 channels would have rank 3

- A sequence of video frames with width x height x frames x channels would have rank 4

So higher rank tensors can represent higher dimensional data like sequences of images and videos. Rank 4 tensors are very commonly used for machine learning with visual data.

Lower-rank tensors like vectors and matrices are useful for representing word embeddings and numeric tabular data.
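The ranks described above can be checked directly in code. This is a minimal sketch assuming TensorFlow 2.x; the image and video sizes (32 x 32 pixels, 8 frames) are arbitrary illustrative values.

```python
import tensorflow as tf

scalar = tf.constant(5.0)                      # rank 0
vector = tf.constant([2, 3, 5])                # rank 1
matrix = tf.constant([[1, 2, 3], [4, 5, 6]])   # rank 2
image = tf.zeros([32, 32, 3])                  # rank 3: height x width x channels
video = tf.zeros([8, 32, 32, 3])               # rank 4: frames x height x width x channels

tensors = [scalar, vector, matrix, image, video]

# The rank is the number of entries in the shape.
ranks = [t.shape.rank for t in tensors]
for t, r in zip(tensors, ranks):
    print("rank:", r, "shape:", t.shape.as_list())
```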

Tensor Data Type

Tensors can represent data of different types. The main data types used are:

- float32 - The default data type used; 32-bit floating point.

- int32 - 32-bit signed integer

- string - variable length string

- bool - Boolean value

- complex64 - Complex number with 32-bit floating point real and imaginary parts

For computer vision, float32 is commonly used since it provides sufficient precision for storing pixel values. For NLP, strings are used to store text, while float32 is used for representations like word embeddings.

The ability to handle multiple data types allows TensorFlow to be flexible. Next, let's look at how TensorFlow represents computations.
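A short sketch of how these data types appear in practice, assuming TensorFlow 2.x. TensorFlow does not mix integer and floating point tensors implicitly in arithmetic, so the example converts explicitly with tf.cast.

```python
import tensorflow as tf

a = tf.constant([1.5, 2.5])             # Python floats become float32
b = tf.constant([1, 2])                 # Python ints become int32
s = tf.constant(["hello", "tensor"])    # variable-length strings
flag = tf.constant([True, False])       # Boolean values
c = tf.constant([1 + 2j], dtype=tf.complex64)

# Adding a float32 tensor to an int32 tensor requires an explicit cast first.
b_as_float = tf.cast(b, tf.float32)
total = a + b_as_float

print(a.dtype, b.dtype, s.dtype, flag.dtype, c.dtype)
print(total.numpy())
```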

Building a Computational Graph

TensorFlow uses a computation graph to represent all the operations that need to be performed for machine learning. The graph nodes are operations, while edges are tensors flowing between them.

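For example, in TensorFlow 1.x a graph for a simple linear regression contains placeholder nodes for the input data, a matmul (matrix multiply) node that multiplies the inputs by the weights, and an add node for the bias. The placeholders inject external data into the graph as input tensors. In TensorFlow 2.x, the arguments of a function decorated with tf.function play the role of placeholders.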

This graph-based representation enables parallelization - individual nodes can execute as soon as inputs are available, unlike a sequential script.

We can build up complex neural networks by composing many simple operations like matrix multiplies, convolutions, activations, etc. The TensorFlow engine optimizes the execution.
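To make the idea of a graph of operations concrete, the following sketch traces a small linear function with tf.function and lists the operation types in the resulting graph. It assumes TensorFlow 2.x; the function name linear and the input values are hypothetical.

```python
import tensorflow as tf

@tf.function
def linear(x, w, b):
    # One matrix multiply followed by a bias addition.
    return tf.matmul(x, w) + b

x = tf.constant([[1.0, 2.0]])
w = tf.constant([[3.0], [4.0]])
b = tf.constant([0.5])

y = linear(x, w, b)  # 1*3 + 2*4 + 0.5 = 11.5

# Inspect the traced graph: each node is an operation, each edge a tensor.
graph = linear.get_concrete_function(x, w, b).graph
op_types = [op.type for op in graph.get_operations()]
print(op_types)
print(y.numpy())
```

The printed list includes the placeholder operations for the three arguments as well as the MatMul operation, which mirrors the nodes-and-edges description above.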

Programming Elements in TensorFlow

The core TensorFlow library provides a Python API. Here are some key classes and functions:

- tf.Tensor - Class to represent data as tensors

- tf.Variable - Class to represent modifiable parameters

- tf.function - Decorates functions to execute as TensorFlow graphs

- tf.data.Dataset - Represents collections of elements for input pipelines

- tf.keras - High-level APIs for building neural networks

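In TensorFlow 1.x, a graph is launched within a tf.Session, which places the graph operations on CPUs/GPUs and runs the computations. In TensorFlow 2.x, operations execute eagerly by default and tf.function traces Python code into a graph, so tf.Session is available only through tf.compat.v1.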
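The difference between tf.Tensor, tf.Variable, and tf.function can be sketched in a few lines. This assumes TensorFlow 2.x, where no session is needed; the function name scale_and_add and all values are illustrative.

```python
import tensorflow as tf

t = tf.constant([1.0, 2.0])   # tf.Tensor: immutable value
v = tf.Variable([1.0, 2.0])   # tf.Variable: mutable state, typically model parameters

@tf.function
def scale_and_add(x, factor):
    # Traced into a graph on the first call; reads the current value of v.
    return x * factor + v

# Variables are updated in place with assign-style methods.
v.assign_add([10.0, 10.0])    # v is now [11.0, 12.0]

out = scale_and_add(t, tf.constant(3.0))  # [1*3 + 11, 2*3 + 12]
print(out.numpy())
```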

Introduction to RNN

Recurrent Neural Networks (RNNs) are neural networks specialized for processing sequential data like time series, text, video, audio, etc. RNNs maintain an internal state that allows them to process arbitrary length sequences.

Some key characteristics of RNNs:

- Used for sequence modeling tasks like language translation, speech recognition, and sentiment analysis.

- Maintain an internal hidden state that captures past context.

- The hidden state is updated based on new input at each time step.

- Outputs are calculated based on current input and stored context in a hidden state.
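The hidden state update described in the list above can be sketched with plain NumPy. This is one common formulation, h_t = tanh(x_t W_x + h_{t-1} W_h + b), not necessarily the exact variant any particular library uses; the dimensions and random weights are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
input_dim, hidden_dim, steps = 3, 4, 5

# Hypothetical, randomly initialized parameters shared across all time steps.
W_x = rng.normal(scale=0.1, size=(input_dim, hidden_dim))
W_h = rng.normal(scale=0.1, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

inputs = rng.normal(size=(steps, input_dim))
h = np.zeros(hidden_dim)  # initial hidden state: no past context yet

states = []
for x_t in inputs:
    # The new state depends on the current input and the previous state.
    h = np.tanh(x_t @ W_x + h @ W_h + b)
    states.append(h)

states = np.stack(states)
print(states.shape)  # one hidden state per time step
```

Because the same weights are reused at every step, the loop can run over a sequence of any length.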

There are different types of RNN architectures. Let's examine them next.

Types of RNN

There are a few common types of RNN architectures:

One-to-one

A single input is mapped to a single output, with no sequence on either side. This is equivalent to a standard feed-forward network.

One-to-many

A single input produces a sequence of outputs. Useful for tasks like image captioning, where one image yields a sequence of words.

Many-to-one

A sequence of inputs converges to a single output. Useful for tasks like sentiment classification.

Many-to-many

A sequence of inputs produces a sequence of outputs, as in language translation.

These input/output patterns can be combined with architectural variations. Stacked RNNs place multiple recurrent layers on top of each other, with data flowing from one layer to the next, and can capture hierarchical patterns in sequential data. Bidirectional RNNs process the sequence in both directions, which allows context from both the past and the future.
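In Keras, whether a recurrent layer emits one output for the whole sequence or one output per time step is controlled by the return_sequences argument. The sketch below compares the two output shapes; the batch size, sequence length, and layer width are arbitrary assumptions.

```python
import numpy as np
import tensorflow as tf

# Hypothetical batch: 2 sequences, 7 time steps, 3 features per step.
batch = np.random.rand(2, 7, 3).astype("float32")

# Sequence in, single vector out (only the final hidden state is returned).
many_to_one = tf.keras.layers.SimpleRNN(4)

# Sequence in, sequence out (the hidden state at every time step is returned).
many_to_many = tf.keras.layers.SimpleRNN(4, return_sequences=True)

one_shape = tuple(many_to_one(batch).shape)
many_shape = tuple(many_to_many(batch).shape)
print(one_shape)   # (2, 4)
print(many_shape)  # (2, 7, 4)
```

Setting return_sequences=True is also what allows recurrent layers to be stacked, since each layer then receives a full sequence from the layer below.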

Next, let's go through a real example of implementing an RNN for a language modeling task.

Use Case Implementation of RNN

Let's walk through an example RNN model in TensorFlow for a language modeling task:

1. Import TensorFlow and helpers:

import numpy as np

import tensorflow as tf

from tensorflow.keras.layers import Embedding, SimpleRNN

2. Prepare training text data and map characters to indices:

text = "this is sample text for training"

vocab = sorted(set(text))

char2idx = {u:i for i, u in enumerate(vocab)}

idx2char = np.array(vocab)

text_as_int = np.array([char2idx[c] for c in text])

seq_length = 5  # assumed window length; each window predicts the character that follows it

X_train = np.array([text_as_int[i:i + seq_length] for i in range(len(text_as_int) - seq_length)])

y_train = text_as_int[seq_length:]

3. Define model hyperparameters:

vocab_size = len(vocab)

embedding_dim = 16

num_rnn_units = 64

4. Build RNN model in Keras:

inputs = tf.keras.Input(shape=(None,))

x = Embedding(vocab_size, embedding_dim)(inputs)

x = SimpleRNN(num_rnn_units)(x)

outputs = tf.keras.layers.Dense(vocab_size)(x)

model = tf.keras.Model(inputs, outputs)

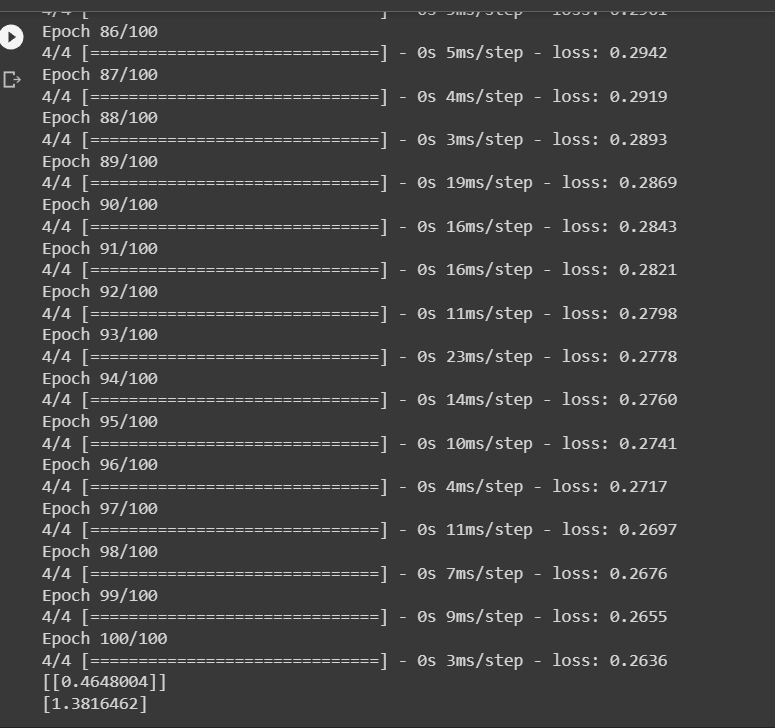

5. Compile and fit the model:

model.compile(optimizer='adam', loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True))  # the Dense layer outputs raw logits

history = model.fit(X_train, y_train, epochs=100)

This builds an Embedding > RNN > Dense model to take character sequences and predict the next character. The same pattern can be extended to sequence tasks like translation, video analysis, etc.
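Once such a model is trained, it can be asked for the most likely next character. The following is a self-contained sketch rather than the tutorial's exact code: the window length seq_length, the seed string, and the reduced epoch count are assumptions, and the final Dense layer is assumed to output raw logits that are converted to probabilities with a softmax.

```python
import numpy as np
import tensorflow as tf

text = "this is sample text for training"
vocab = sorted(set(text))
char2idx = {ch: i for i, ch in enumerate(vocab)}
idx2char = np.array(vocab)

# Assumed windowing: every 5 consecutive characters predict the next one.
seq_length = 5
encoded = np.array([char2idx[ch] for ch in text])
X = np.array([encoded[i:i + seq_length] for i in range(len(encoded) - seq_length)])
y = encoded[seq_length:]

model = tf.keras.Sequential([
    tf.keras.layers.Embedding(len(vocab), 16),
    tf.keras.layers.SimpleRNN(64),
    tf.keras.layers.Dense(len(vocab)),  # raw logits, one per character
])
model.compile(
    optimizer="adam",
    loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),
)
model.fit(X, y, epochs=20, verbose=0)

# Encode a seed string, predict logits, and convert them to probabilities.
seed = "this "
seed_ids = np.array([[char2idx[ch] for ch in seed]])
logits = model.predict(seed_ids, verbose=0)
probs = tf.nn.softmax(logits, axis=-1).numpy()[0]

next_char = idx2char[int(np.argmax(probs))]
print(repr(next_char))
```

With so little training text the prediction is not meaningful in itself; the point is the flow from characters to indices to a probability distribution over the vocabulary.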

Code examples

import tensorflow as tf

from tensorflow import keras

import numpy as np

# Generate data

X_train = np.random.rand(100, 1)

y_train = 2 * X_train + np.random.rand(100, 1)

# Define input layer

inputs = keras.Input(shape=(1,))

# Define model layers

output = keras.layers.Dense(1, activation='linear')(inputs)

# Compile and train model

model = keras.Model(inputs, output)

model.compile(loss='mean_squared_error', optimizer=keras.optimizers.SGD(0.01))

model.fit(X_train, y_train, epochs=100)

# Print trained parameters

weights, bias = model.layers[1].get_weights()

print(weights)

print(bias)

This implementation constructs a basic linear regression model of the form y = Wx + b and uses the Keras API in TensorFlow to train it to predict y values from the input data X. The key steps are generating synthetic data with NumPy, defining the model as a single Dense layer with one unit and a linear activation, compiling it with a mean squared error loss and a stochastic gradient descent (SGD) optimizer, calling fit to minimize the loss, and retrieving the trained weight W and bias b with get_weights.

Overall, the code snippet demonstrates core techniques like defining a model, training it by optimizing a loss function, and extracting the trained parameters.
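For comparison, the same y = Wx + b fit can be sketched with lower-level TensorFlow 2.x primitives: tf.Variable for the parameters, tf.matmul for the model, and tf.GradientTape for a manual training loop. The noiseless synthetic data (true slope 2, true intercept 1), the learning rate, and the step count are assumptions chosen so the result is easy to check.

```python
import numpy as np
import tensorflow as tf

# Hypothetical noiseless data on the line y = 2x + 1.
rng = np.random.default_rng(0)
X = rng.random((100, 1)).astype("float32")
y = (2.0 * X + 1.0).astype("float32")

# Trainable parameters, initialized to zero.
W = tf.Variable(tf.zeros([1, 1]))
b = tf.Variable(tf.zeros([1]))
learning_rate = 0.5

for _ in range(500):
    with tf.GradientTape() as tape:
        pred = tf.matmul(X, W) + b
        loss = tf.reduce_mean(tf.square(pred - y))  # mean squared error
    # Gradients of the loss with respect to both parameters.
    dW, db = tape.gradient(loss, [W, b])
    # Plain full-batch gradient descent update.
    W.assign_sub(learning_rate * dW)
    b.assign_sub(learning_rate * db)

print(W.numpy(), b.numpy())
```

This is essentially what model.fit does internally, with Keras handling the batching, the optimizer, and the bookkeeping.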

Conclusion

This TensorFlow tutorial covered the basics like tensors, tensor rank, tensor data types, computation graphs, core TensorFlow API elements, linear regression examples to predict numeric values, and recurrent neural networks like SimpleRNN for sequence data.

TensorFlow makes it possible to express arbitrary computations as graphs and train machine learning models efficiently. It provides the flexibility to deploy across platforms, from mobile to the cloud.

The end-to-end regression and RNN examples show how TensorFlow can be used for real-world deep learning tasks and provide a solid basis for using it in your own machine learning projects.

FAQs

1. What languages does TensorFlow support?

TensorFlow APIs are available in Python, C++, Java, Go, Swift, and JavaScript, among which Python is most commonly used.

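2. TensorFlow vs. PyTorch: Which is better?

TensorFlow 1.x used static graphs, while PyTorch uses dynamic graphs. TensorFlow 2.x executes eagerly by default and builds graphs through tf.function, so the two are now closer in style. TensorFlow is often described as offering more low-level control, while PyTorch is often described as more Pythonic. Both are excellent deep learning frameworks.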

3. Can I use TensorFlow for classical ML tasks?

Yes, TensorFlow provides APIs like Keras (and, in older releases, tf.estimator) that can be used to implement models like linear regression, SVMs, etc. that go beyond deep learning.

4. Does TensorFlow only run on GPUs?

No, TensorFlow can leverage GPUs for acceleration but can run models on CPUs as well. TensorFlow Lite and TensorFlow.js allow deployment on mobile devices and web browsers.
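A quick way to see which devices TensorFlow can use on a given machine is sketched below, assuming TensorFlow 2.x. The code falls back to the CPU when no GPU is visible, so it runs on either kind of system.

```python
import tensorflow as tf

# List the devices TensorFlow has detected.
cpus = tf.config.list_physical_devices("CPU")
gpus = tf.config.list_physical_devices("GPU")
print("CPUs:", len(cpus), "GPUs:", len(gpus))

# Place the computation on a GPU if one exists, otherwise on the CPU.
device_name = "/GPU:0" if gpus else "/CPU:0"
with tf.device(device_name):
    a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
    product = tf.matmul(a, a)

print(product.numpy())
```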

5. What are the limitations of TensorFlow?

TensorFlow can have a steep learning curve. Debugging and troubleshooting TensorFlow code can be difficult. It is slower than frameworks like PyTorch for rapid prototyping.
